Introduction

Blind and low vision (BLV) individuals often require assistance when navigating through the world. To promote independent navigation for BLV individuals, prior work has leveraged existing technologies to create navigation assistance tools, usually in the form of mobile apps and other mobile-based technologies. Although technologies such as GPS have been leveraged for outdoor navigation assistance, we identified a need for assistive technologies that also work in indoor settings.

To address this, I took the lead role in developing ASSIST (an acronym for "Assistive Sensor Solutions for Independent and Safe Travel"), an indoor mobile navigation system that provided highly accurate positioning and navigation instructions for BLV users. ASSIST was unique in its hybrid positioning technique, which combined Bluetooth Low Energy (BLE) beacons with an augmented reality (AR) framework (in our case, the now-defunct Google Tango) to provide centimeter-level positioning.

I was involved in this project for almost three years — from its conception during my sophomore year through graduation — as part of my work with the City College Visual Computing Laboratory (CCVCL). We did much of our testing at Lighthouse Guild, a vision rehabilitation and advocacy organization in New York City.

What is ASSIST?

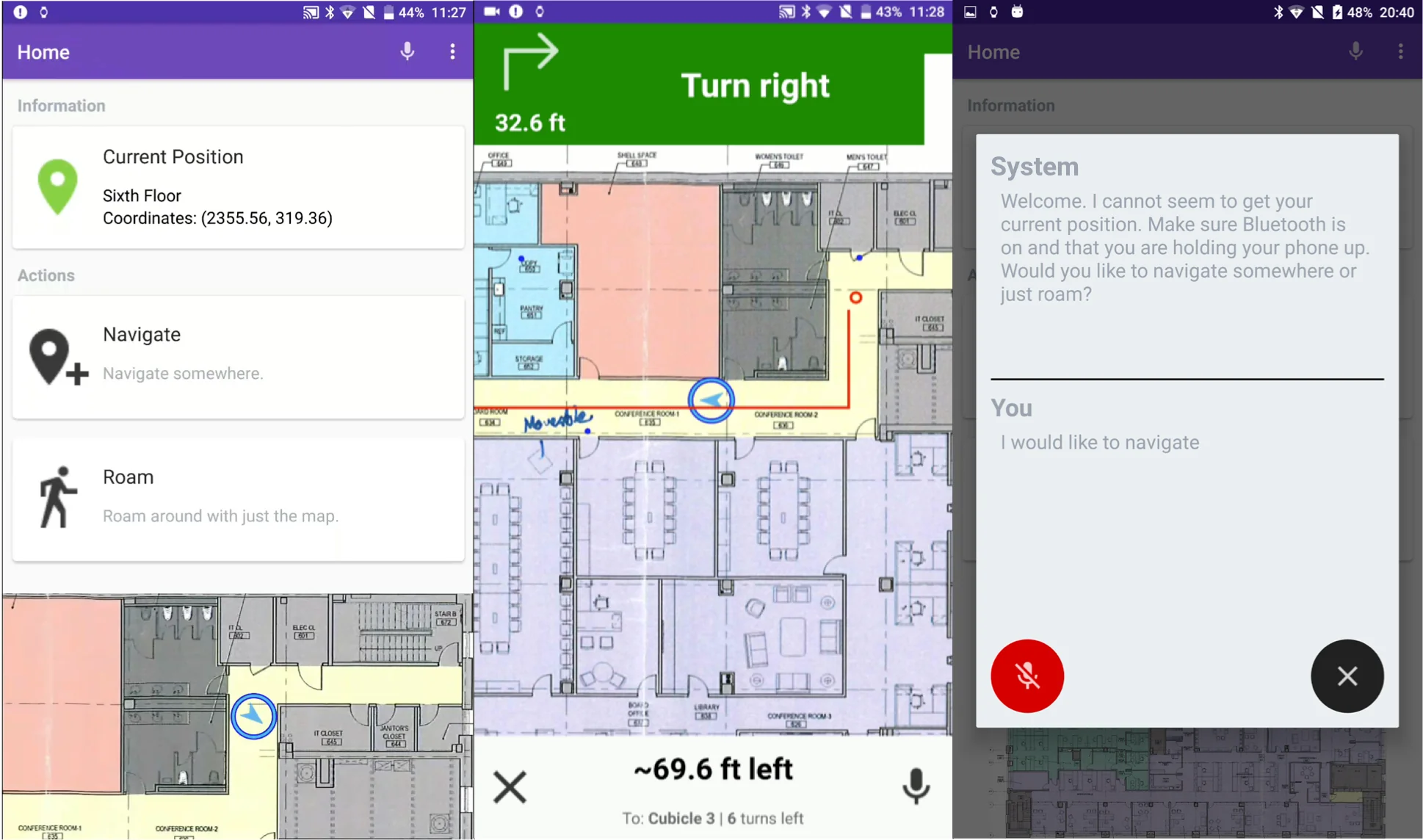

ASSIST was centered around an Android mobile application that relied on BLE beacons and Google Tango to provide turn-by-turn directions to BLV users within indoor environments. Beyond turn-by-turn navigation, we experimented with components that provided situational and environmental awareness, including:

- Object recognition, which used a wearable camera to reliably alert users to dynamic situational elements (such as people within the user's surroundings), and

- Semantic recognition, which used map annotations to alert users to static environmental characteristics.

The app also featured multimodal feedback, including a visual interface (useful for users who have some level of vision), voice input and feedback, and vibration reminders. Users could customize the level of feedback provided by each mechanism based on their needs. For example, users could toggle between steps, meters, and feet for distance measurements as well as between visual, audio, and vibration feedback depending on their preferences.

Screenshots from the ASSIST mobile application. From left to right: (a) home screen, (b) navigation interface, and (c) voice engine interface.
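As a rough illustration of the distance-unit toggle described above, the following sketch converts a distance in meters into the user's preferred unit before it is spoken or displayed. The function name, the unit strings, and the default step length are assumptions made for this example; they are not taken from the ASSIST codebase, and a real app would likely calibrate step length per user.

```python
METERS_PER_FOOT = 0.3048
# Assumed average step length; hypothetical value for illustration only.
DEFAULT_STEP_LENGTH_M = 0.7


def format_distance(meters: float, unit: str,
                    step_length_m: float = DEFAULT_STEP_LENGTH_M) -> str:
    """Convert a distance in meters to the chosen unit and round it."""
    if unit == "meters":
        value = meters
    elif unit == "feet":
        value = meters / METERS_PER_FOOT
    elif unit == "steps":
        value = meters / step_length_m
    else:
        raise ValueError(f"Unsupported unit: {unit}")
    # Whole numbers are easier to follow in spoken instructions.
    return f"{round(value)} {unit}"


if __name__ == "__main__":
    for unit in ("meters", "feet", "steps"):
        print("Turn left in", format_distance(7.0, unit))
```

Keeping the conversion in one place means the same navigation instruction can be rendered for any feedback channel without the routing logic knowing which unit the user picked.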

Hybrid Positioning

ASSIST was notable in that it used both BLE beacons and Google Tango to calculate a user's current position. Existing approaches at the time mainly used BLE beacons by themselves to localize; however, through our internal tests, we found that BLE beacon signals tended to be very noisy. They were too noisy for precise positioning, but sufficient for calculating a rough, or coarse, location.

A Google Tango-enabled device contained an RGB-D camera, which allowed the device to estimate its pose (position and orientation within a 3D environment) without the use of GPS or other external signals. Tango stored a map of the current environment within an area description file (ADF), a feature map of an indoor environment that allowed the device to re-localize itself within that environment.

We found, however, that Tango ADFs were limited in size. On a large floor of a building, only part of the floor could be mapped before the ADF became too large and caused the system to crash. Still, because Tango-based positioning was extremely accurate, it was excellent for calculating a precise, or fine, location.

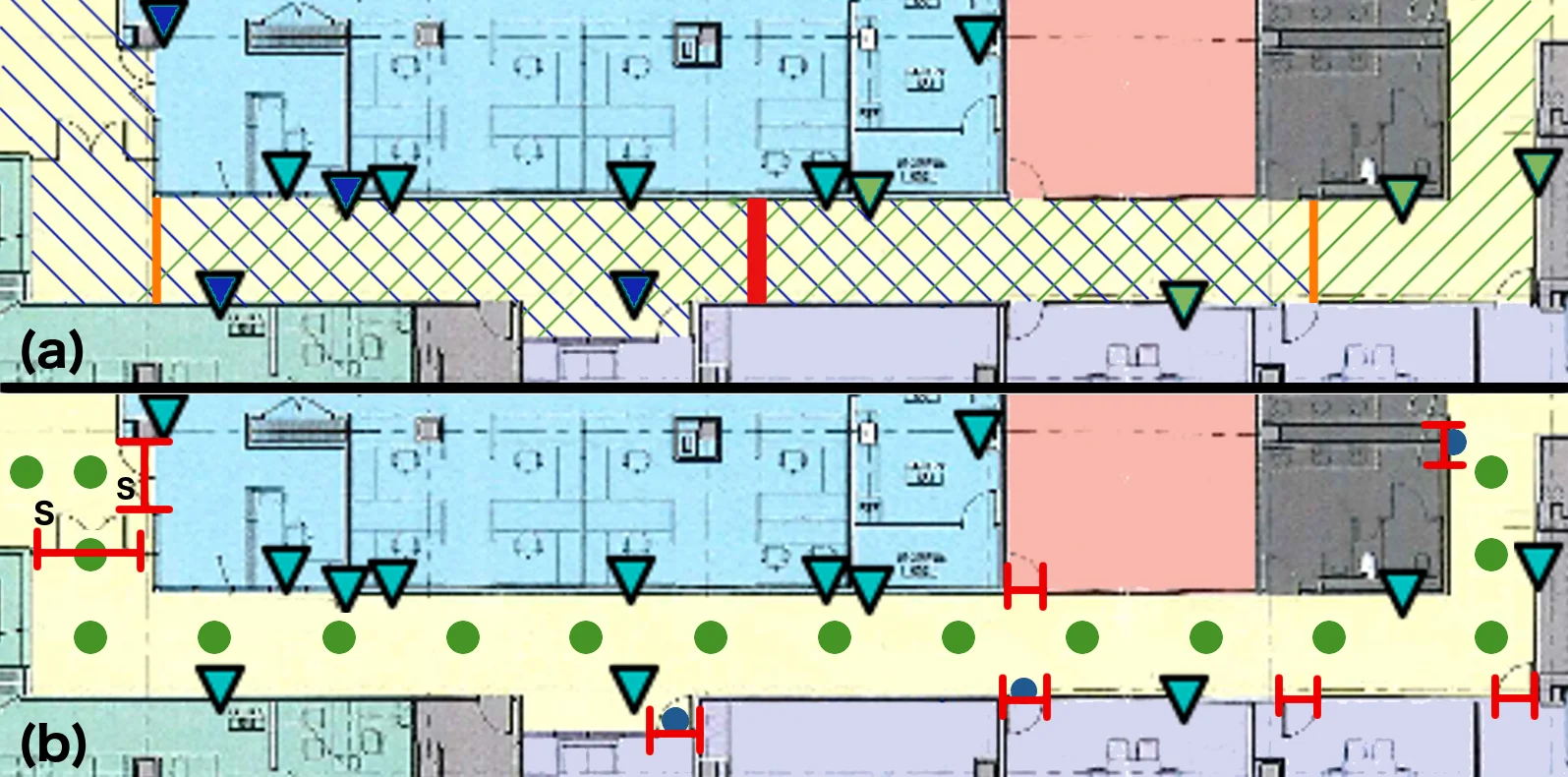

Our hybrid technique played to the strengths of both components. We used Tango to map a floor, or a portion of a floor, as usual. We then "fingerprinted" the BLE beacon signals found within the mapped area during an offline mapping phase. As a user traveled through the area, the ASSIST app measured the BLE beacon signals at the user's current position to determine which general area the user was in. Once it determined the area, ASSIST selected and activated the corresponding ADF. As the user moved from area to area, ASSIST swapped the currently active ADF for the one corresponding to the newly entered area.

This allowed us to map entire floors or buildings while maintaining Tango's centimeter-level precision.
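The coarse-to-fine handoff can be sketched roughly as follows. This is a minimal illustration, not the ASSIST implementation: it assumes a nearest-neighbor match between the live BLE scan and the stored fingerprints, and the region names, beacon IDs, ADF names, RSSI values, and the `MISSING_RSSI` placeholder are all hypothetical.

```python
import math

# Assumed placeholder RSSI (dBm) for beacons that a scan did not hear.
MISSING_RSSI = -100.0

# Hypothetical fingerprints from the offline mapping phase:
# region name -> list of scans, each mapping beacon ID -> RSSI in dBm.
FINGERPRINTS = {
    "east_wing": [{"b1": -55, "b2": -80}, {"b1": -60, "b2": -78}],
    "west_wing": [{"b2": -58, "b3": -62}, {"b2": -61, "b3": -57}],
}

# Hypothetical mapping from coarse region to the ADF that covers it.
ADF_FOR_REGION = {"east_wing": "adf_east", "west_wing": "adf_west"}


def signal_distance(scan_a, scan_b):
    """Euclidean distance between two scans over the union of their beacons."""
    beacons = set(scan_a) | set(scan_b)
    return math.sqrt(sum(
        (scan_a.get(b, MISSING_RSSI) - scan_b.get(b, MISSING_RSSI)) ** 2
        for b in beacons
    ))


def coarse_region(scan, fingerprints=FINGERPRINTS):
    """Return the region whose closest stored fingerprint best matches the scan."""
    best_region, best_distance = None, math.inf
    for region, samples in fingerprints.items():
        for sample in samples:
            distance = signal_distance(scan, sample)
            if distance < best_distance:
                best_region, best_distance = region, distance
    return best_region


class AdfSwitcher:
    """Tracks the active ADF and swaps it when the coarse region changes."""

    def __init__(self, adf_for_region):
        self.adf_for_region = adf_for_region
        self.active_adf = None

    def update(self, scan):
        adf = self.adf_for_region.get(coarse_region(scan))
        if adf is not None and adf != self.active_adf:
            # A real app would load the new ADF into the AR framework here.
            self.active_adf = adf
        return self.active_adf


if __name__ == "__main__":
    switcher = AdfSwitcher(ADF_FOR_REGION)
    print(switcher.update({"b1": -57, "b2": -79}))
    print(switcher.update({"b2": -60, "b3": -60}))
```

Because BLE only has to pick the right region, its noise matters far less than it would for direct positioning; the fine position still comes from the AR framework once the matching ADF is active.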

People Detection and Map Annotations

We also experimented with additional components to provide further information to BLV users.

The "people recognition" portion focused on detecting other people via a wearable camera. The goal was to let BLV users determine whether anyone else was around them, either to ask for assistance or for safety reasons. Our implementation sent images to a cloud server running a YOLOv2 model trained on the Hollywood Heads dataset, which reported back how many people were within the user's field of view. We also added a pre-trained facial recognition model to identify faces that may be familiar to the user. Our proof-of-concept used a GoPro Hero5 Session as the wearable camera.
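To make the counting step concrete, here is a small sketch of how a list of detections could be reduced to a spoken summary. The `Detection` structure, the `"head"` label, and the 0.5 confidence threshold are assumptions for illustration; the actual response format of our detection server is not shown here.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object; a hypothetical stand-in for the detector output."""
    label: str
    confidence: float


def count_people(detections, min_confidence=0.5):
    """Count head detections that meet the (assumed) confidence threshold."""
    return sum(
        1 for d in detections
        if d.label == "head" and d.confidence >= min_confidence
    )


def describe(count):
    """Turn a count into a short phrase suitable for voice feedback."""
    if count == 0:
        return "No people detected nearby"
    if count == 1:
        return "1 person detected nearby"
    return f"{count} people detected nearby"


if __name__ == "__main__":
    frame = [
        Detection("head", 0.91),
        Detection("head", 0.62),
        Detection("head", 0.30),  # below threshold, ignored
    ]
    print(describe(count_people(frame)))
```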

For the map annotation portion, we explicitly annotated the map with the locations of various static environmental characteristics (such as doorways and elevators). We then used these annotations to alert the user to these elements and incorporate them into navigation, for example by warning the user that they were approaching a doorway.

Visualization of map annotations on the floor plan of a long corridor in our testbed. Key: Triangles = beacons. (a) Red line = primary ADF border, orange lines = secondary ADF borders, diagonal lines = ADF coverage areas. (b) Green dots = navigation nodes, red "H" symbols = doorways, "S" = security door.
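A simple way to picture annotation-based alerts is a proximity check against the user's current position. The sketch below assumes 2D map coordinates in meters and a fixed alert radius; the `Annotation` type, the coordinates, and the 2-meter radius are hypothetical choices for this example rather than details of ASSIST itself.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    """A static map feature at a 2D map position (meters); hypothetical type."""
    kind: str
    x: float
    y: float


def nearby_annotations(position, annotations, radius_m=2.0):
    """Return annotations within radius_m of position, nearest first."""
    px, py = position
    with_distance = [
        (math.hypot(a.x - px, a.y - py), a) for a in annotations
    ]
    with_distance.sort(key=lambda pair: pair[0])
    return [a for distance, a in with_distance if distance <= radius_m]


if __name__ == "__main__":
    corridor = [
        Annotation("doorway", 5.0, 0.0),
        Annotation("security door", 20.0, 0.0),
    ]
    for annotation in nearby_annotations((4.0, 0.0), corridor):
        print(f"Approaching {annotation.kind}")
```

In practice, an app would also want to avoid repeating the same alert on every position update, for instance by remembering which annotations have already been announced.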

Client-Server Architecture

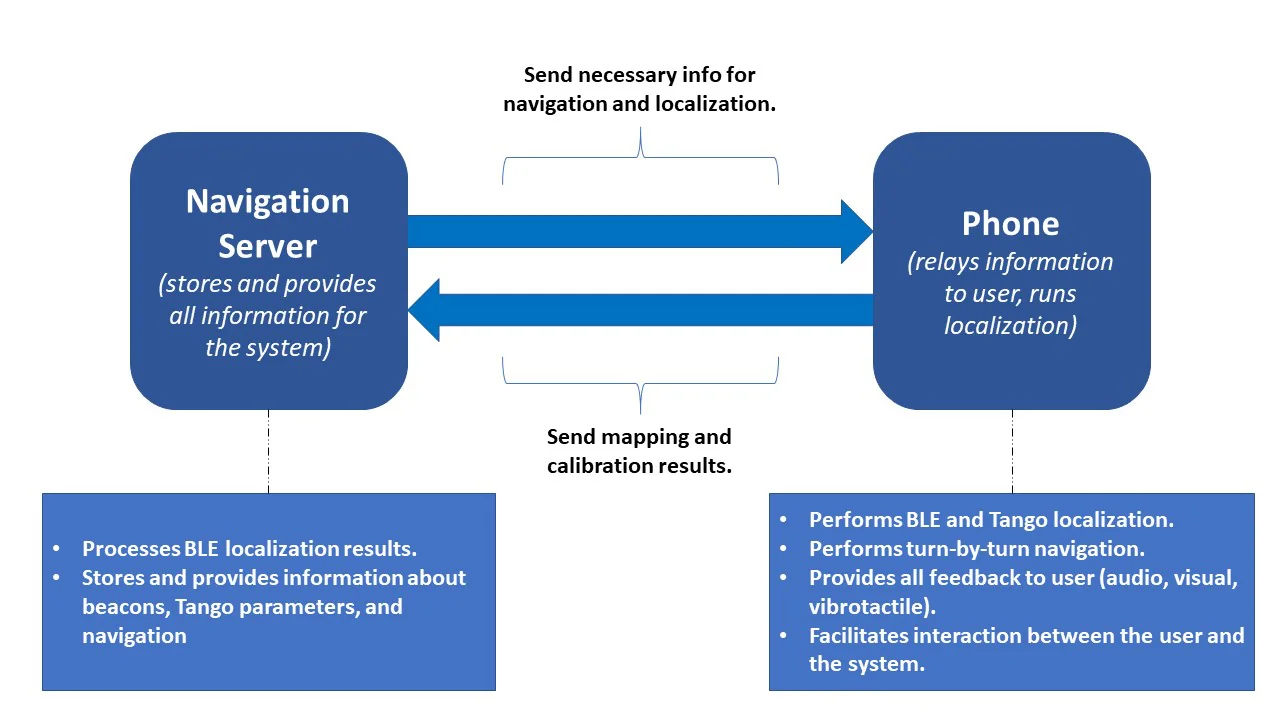

We implemented ASSIST via a client-server architecture. The server stored and provided all information for the system; its responsibilities included (but were not limited to) processing BLE beacon localization results and Tango parameters. The mobile device (the phone) interfaced with the user and provided them with turn-by-turn navigation and other feedback.

Illustration of ASSIST's client-server structure. ASSIST consisted of the navigation server (which stored all necessary information) and the mobile device (which interfaced with the user).

The detection server and body camera were separate components that integrated into the core flow.

User Testing, Implications, and Continuing Work

We performed two studies to evaluate ASSIST: one to assess user impressions of its usability, and another to assess users' navigation performance with the app. In brief, we found that, despite some bugs, participants had very positive impressions of the app. We also found that participants made significantly fewer "mistakes" (bumps into obstacles, wrong turns, and other required interventions) when navigating with ASSIST than when navigating with only their preferred navigation aid.

ASSIST is part of a larger project that seeks to create a smart and accessible transportation hub featuring indoor navigation assistance alongside crowd analysis and other capabilities. It is the result of a collaboration between numerous institutions, including academic, industrial, and governmental entities, and has been the subject of many grants. Work on ASSIST has continued since I graduated and left the project. In particular, some work has focused on implementing ASSIST's fine localization with more modern AR frameworks, such as ARCore (Android) and ARKit (iOS).