Introduction

Digital images play an essential role in everyday life. However, blind and low vision (BLV) users cannot fully perceive images through sight and must rely on other methods. A common way of conveying images to BLV users is through alternative text descriptions (known as "alt text"), but alt text often suffers from quality issues and does not allow BLV users to form their own opinions about what an image conveys. Thus, researchers have explored techniques that supplement alt text and let BLV users perceive images more directly.

Touchscreen-based image exploration systems have been found to grant BLV users a strong sense of autonomy while exploring and help them better visualize what the image is showing. Exploring images via touch, however, requires far more effort than simply hearing a description: It often feels like shooting in the dark, and it is easy to miss important objects and information while exploring via touch.

In this project, we explore the pain points inherent in touchscreen-based image exploration systems, then design and test a suite of tools called ImageAssist to help scaffold the image exploration process for BLV users. ImageAssist guides users to important objects, gives users a sense of the image's most important features, and helps users zoom in on small, tightly clustered areas.

Formative Study

To identify the specific issues present in touchscreen-based image exploration systems, we performed a formative study in which we built a smartphone-based image exploration app designed to recreate state-of-the-art approaches to exploring images via a touchscreen.

This formative study yielded three design goals for making touchscreen-based image exploration systems less frustrating:

- Provide a "table of contents" for the image so that users can decide for themselves what parts of the image are interesting.

- Guide users toward the "main characters" (or most important parts) of the image.

- Help users discover and survey small and tightly clustered areas.

Menu & Beacon Tool

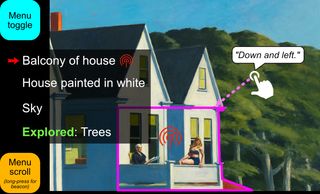

The menu & beacon tool announces all areas within an image using a simple audio menu and directs users to areas they are interested in via a looping audio beacon. The menu gives users a "table of contents" of the image, and the beacon allows users to quickly pinpoint specific objects without needing to pixel hunt (i.e., without needing to thoroughly search every pixel in the image).
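The beacon's behavior can be sketched as a function called once per loop iteration. This is a minimal illustration, not ImageAssist's implementation: the `Area` rectangle type, the `beacon_cue` function, and the specific cues (stereo pan from the horizontal offset, shorter gaps between sounds as the finger approaches, and a spoken direction such as "up left") are all hypothetical choices made for this example.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Area:
    """Hypothetical axis-aligned rectangle for a labeled image area (screen pixels)."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def center(self) -> Tuple[float, float]:
        return (self.x0 + self.x1) / 2, (self.y0 + self.y1) / 2

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def beacon_cue(finger: Tuple[float, float], target: Area,
               screen_w: float, screen_h: float,
               min_interval: float = 0.1,
               max_interval: float = 1.0) -> Tuple[float, float, str]:
    """Return (pan, interval_s, hint) for one iteration of the looping beacon.

    pan:        -1.0 (full left) to 1.0 (full right), from the horizontal offset.
    interval_s: seconds until the next beacon sound; shorter when closer.
    hint:       a short spoken direction such as "up left".
    """
    fx, fy = finger
    if target.contains(fx, fy):
        return 0.0, min_interval, "on target"
    tx, ty = target.center()
    dx, dy = tx - fx, ty - fy
    # Offset relative to half the screen width, clamped to the valid pan range.
    pan = max(-1.0, min(1.0, dx / (screen_w / 2)))
    # Distance as a fraction of the screen diagonal (at most 1 on screen).
    dist = min(1.0, math.hypot(dx, dy) / math.hypot(screen_w, screen_h))
    interval = min_interval + (max_interval - min_interval) * dist
    words = []
    # Ignore offsets smaller than 5% of the screen along each axis.
    if abs(dy) > 0.05 * screen_h:
        words.append("down" if dy > 0 else "up")  # screen y grows downward
    if abs(dx) > 0.05 * screen_w:
        words.append("right" if dx > 0 else "left")
    return pan, interval, " ".join(words) or "close"
```

For example, with a finger at the center of an 800×800 screen and a target in the upper-left corner, the sketch returns a left-panned cue and the hint "up left"; the gap between sounds shrinks as the finger moves toward the target.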

The menu & beacon tool takes inspiration from prior work on navigation for BLV individuals in both physical-world and video game environments (for example, my prior work on NavStick and spatial awareness tools).

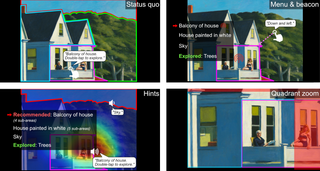

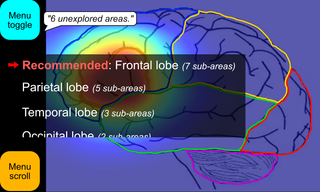

ImageAssist's menu & beacon tool. The menu lists all areas within the image, including those that the user has already explored. The beacon uses a looping sound effect and announcements to direct the user to a target area.

Hints Tool

The hints tool addresses the second design goal: It is designed to give users a sense of direction toward the most important parts of the image. The hints tool provides three utilities to the user: an enhanced menu, a prominence indicator, and a first touch indicator.

The enhanced menu is an augmented version of the audio menu from the menu & beacon tool that marks "recommended" areas the user may want to explore. These recommendations convey the semantic prominence of areas within the image. For example, a floor plan is often easier to understand if the user knows where the entrance is; they can then survey the other areas relative to it. In this case, the enhanced menu will surface the entrance or foyer first.

In other words, the enhanced menu highlights the logical "main character" of the image.
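One way to order such a menu is to list recommended areas first and keep the remaining areas in their original order. In this sketch, the `MenuItem` type and its `recommend_rank` field are hypothetical: the source does not say how recommendations are produced, so we simply assume each recommended area carries a rank (lower meaning more important).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MenuItem:
    name: str
    # Hypothetical annotation: lower rank = more logically important;
    # None means the area is not recommended.
    recommend_rank: Optional[int] = None
    explored: bool = False


def spoken_label(item: MenuItem) -> str:
    """Build the text read aloud for one menu entry."""
    parts = [item.name]
    if item.recommend_rank is not None:
        parts.append("recommended")
    if item.explored:
        parts.append("explored")
    return ", ".join(parts)


def enhanced_menu(items: List[MenuItem]) -> List[str]:
    """Recommended areas first (by rank); other areas keep their original order.

    Python's sort is stable, so non-recommended items are not reshuffled.
    """
    ordered = sorted(
        items,
        key=lambda i: (i.recommend_rank is None,
                       i.recommend_rank if i.recommend_rank is not None else 0),
    )
    return [spoken_label(i) for i in ordered]
```

For a floor plan whose foyer has rank 0, the foyer would be read first, followed by any other recommended rooms and then the rest. Explored areas stay in the list, as in the basic menu.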

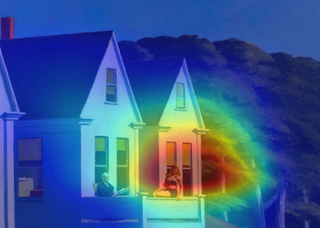

The prominence indicator changes the volume of spoken area names to correspond to how visually prominent the area is within the image. We ran our images through a residual neural network (ResNet-18) pre-trained on the ImageNet dataset and then fed the network's activation outputs into ImageAssist to drive the prominence indicator.
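The step from activations to volume can be sketched as follows. We assume the ResNet-18 activations have already been reduced to a non-negative 2D heatmap aligned with the image (for example, by averaging channels of a late convolutional layer and upsampling); that reduction, the box format, the per-area averaging, and the volume range are illustrative assumptions rather than the project's actual pipeline.

```python
from typing import Dict, List, Tuple

# (x0, y0, x1, y1) in heatmap cells; x1 and y1 are exclusive.
Box = Tuple[int, int, int, int]


def area_prominence(heatmap: List[List[float]],
                    boxes: Dict[str, Box]) -> Dict[str, float]:
    """Mean (assumed non-negative) activation inside each area, scaled so the max is 1.0."""
    raw = {}
    for name, (x0, y0, x1, y1) in boxes.items():
        cells = [heatmap[y][x] for y in range(y0, y1) for x in range(x0, x1)]
        raw[name] = sum(cells) / len(cells) if cells else 0.0
    top = max(raw.values(), default=0.0)
    return {n: (v / top if top > 0 else 0.0) for n, v in raw.items()}


def announcement_volume(prominence: float,
                        min_vol: float = 0.3, max_vol: float = 1.0) -> float:
    """Map prominence in [0, 1] linearly to a speech volume in [min_vol, max_vol]."""
    p = max(0.0, min(1.0, prominence))
    return min_vol + (max_vol - min_vol) * p
```

A non-zero minimum volume keeps even the least prominent area names audible; only their relative loudness carries the saliency information.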

Unlike the enhanced menu, the prominence indicator highlights the image's visual main character. In some images — such as a painting — the logical and visual main characters may be the same. In other images, however — such as floor plans — they may be different. In a floor plan, for example, the logical main character may be the entrance of the area, while the visual main character may be a large central gathering place or a room that is shaded differently.

The first touch indicator is a short sound effect that plays when a user touches an area for the first time. This indicator helps the user keep track of which areas they have already explored.
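Tracking first touches only requires remembering which areas have been visited. The `TouchExplorer` class below is a hypothetical sketch: it assumes rectangular areas and, when areas overlap (such as a newspaper held by a person), reports the smallest area under the finger, which is our own choice rather than a documented ImageAssist behavior.

```python
from typing import Dict, Optional, Set, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in screen pixels


class TouchExplorer:
    """Hypothetical touch handler that reports the area under the finger and
    whether this is the first time the user has touched it."""

    def __init__(self, areas: Dict[str, Box]):
        self.areas = areas
        self.visited: Set[str] = set()

    def hit_test(self, x: float, y: float) -> Optional[str]:
        """Return the smallest area containing (x, y), or None."""
        hits = [(name, (x1 - x0) * (y1 - y0))
                for name, (x0, y0, x1, y1) in self.areas.items()
                if x0 <= x <= x1 and y0 <= y <= y1]
        if not hits:
            return None
        return min(hits, key=lambda h: h[1])[0]

    def on_touch(self, x: float, y: float) -> Optional[Tuple[str, bool]]:
        """Return (area name, first_touch); a caller would speak the name and
        play the first-touch sound effect when first_touch is True."""
        name = self.hit_test(x, y)
        if name is None:
            return None
        first_touch = name not in self.visited
        self.visited.add(name)
        return name, first_touch
```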

ImageAssist's hints tool. The heatmap superimposed on the underlying image communicates the most visually salient area of the image via volume changes during area name announcements. The enhanced menu communicates the most logically important areas within the image via recommended areas.

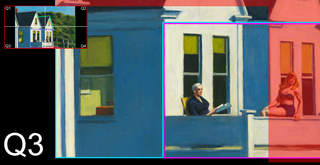

Quadrant Zoom Tool

The quadrant zoom tool allows users to zoom into a portion of the image, specifically one of its four quadrants. Users double-tap a quadrant with two fingers to zoom into it and triple-tap with two fingers anywhere on the screen to zoom back out. Announcements accompany each action. When zoomed in, users can only "see" what lies within the current quadrant; to explore objects in another quadrant, they must zoom out and then zoom into that quadrant.
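The zoom logic amounts to a small state machine plus a coordinate transform. In this sketch, the `QuadrantZoom` class, its method names, the announcement strings, and the reading-order numbering of quadrants (1 top-left, 2 top-right, 3 bottom-left, 4 bottom-right) are assumptions. We also assume the screen and image share the same dimensions, so a zoomed quadrant fills the screen at 2x scale, and that a double-tap while already zoomed in does not switch quadrants.

```python
from typing import Optional, Tuple


class QuadrantZoom:
    """Hypothetical quadrant zoom state (quadrants numbered in reading order)."""

    def __init__(self, width: float, height: float):
        self.w, self.h = width, height
        self.quadrant: Optional[int] = None  # None means zoomed out

    def quadrant_at(self, x: float, y: float) -> int:
        col = 0 if x < self.w / 2 else 1
        row = 0 if y < self.h / 2 else 1
        return 1 + row * 2 + col

    def two_finger_double_tap(self, x: float, y: float) -> str:
        """Zoom into the tapped quadrant; returns the (hypothetical) announcement."""
        if self.quadrant is None:
            self.quadrant = self.quadrant_at(x, y)
            return f"Zoomed into quadrant {self.quadrant}"
        # Assumed: switching quadrants requires zooming out first.
        return f"Already in quadrant {self.quadrant}"

    def two_finger_triple_tap(self) -> str:
        self.quadrant = None
        return "Zoomed out"

    def to_image(self, x: float, y: float) -> Tuple[float, float]:
        """Map a screen point to image coordinates at the current zoom level."""
        if self.quadrant is None:
            return x, y
        row, col = divmod(self.quadrant - 1, 2)
        # The quadrant fills the screen at 2x scale: halve, then offset.
        return col * self.w / 2 + x / 2, row * self.h / 2 + y / 2
```

Because every screen point maps into the current quadrant, objects in other quadrants are unreachable until the user zooms out, matching the behavior described above.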

ImageAssist's quadrant zoom tool. The image is broken up into four quadrants (labeled Q1 to Q4 within the inset at the top-left). In this image, the user has zoomed into the bottom-left quadrant (Q3) of the image. Red areas indicate parts of the image located in other quadrants.

User Study

With our user study, we wanted to investigate how well and in what ways our tools alleviated some of the bottlenecks inherent in touchscreen-based image exploration systems. We conducted this study with nine BLV participants and four different images: a painting, a stock photo, a historical photo, and a floor plan.

The four images used in our study. Clockwise from top-left: Painting (The Art of Painting by Johannes Vermeer), stock photo (a scene of a summer day on an urban greenway), floor plan of a generic condominium, and historical photo (a photo taken in the 1930s of a man holding up a newspaper).

Here, we report some notable findings from our study. Our academic publication below describes our study results in more detail.

Regarding the menu & beacon tool, we looked at how having a "table of contents" affected participants' behavior while exploring the image. We found that this directory allowed them to obtain information about the image without thoroughly exploring every pixel, which on its own might suggest that participants used the menu in place of touch exploration. However, we found the opposite: participants tended to explore more areas within images when they had the menu & beacon tool than when they did not, touching a median of 88% of areas with the baseline tool vs. 100% with the menu & beacon tool. This suggests that participants saw the menu as supplementing touch exploration rather than replacing it. (Note that, in our study, participants could stop exploring an image whenever they wanted.)

Regarding the hints tool, we found that participants felt that the prominence indicator made them think about images differently. One participant talked about how current representations for communicating images — such as alt text and captions — were "confining" for BLV users. They went on to say:

[H]aving a tool like [the prominence indicator] is actually pretty neat, because it gives you the insight to understand what other people might be seeing, while also telling you how things are laid out so you can see things for yourself.

This quote suggests that letting users explore images for themselves, rather than confining them to simplistic representations, can help them appreciate images more than they otherwise would.

Finally, participants' opinions on the quadrant zoom tool were split. Some participants, especially those with small phone screens, found zooming incredibly helpful for surveying small areas. Others, however, found quadrant zooming difficult to use on its own: even with small areas enlarged, they still needed explicit guidance to explore them.

A heatmap representing visual saliency is superimposed on Second Story Sunlight by Edward Hopper (1960), which is a painting we used as a trial image in our user study. Using this heatmap, BLV users can now quickly identify the parts of the image that draw sighted people's attention.

Applications

ImageAssist can help BLV people explore and understand many important types of images.

One opportunity is in STEM education for BLV students. With the increasing availability of touchscreen devices, tools like ImageAssist can make scientific diagrams more accessible and informative to BLV STEM students. Indeed, some of our participants were vocal about how teachers of students with vision impairments (TVI) could leverage a system like ImageAssist to help students better understand concepts.

Depiction of ImageAssist within an educational context. With ImageAssist, BLV students can more deeply understand scientific diagrams, such as this diagram of a human brain.

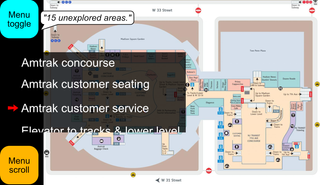

Another context is map exploration. ImageAssist could make it easier to explore maps of physical-world environments in a "rehearsal" context, that is, before physically navigating through those environments. A map-oriented version of ImageAssist could also communicate important information such as the size and shape of parts of the map and the distances between them.
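As a rough illustration of how such a version might compute sizes and distances, the sketch below converts pixel measurements to meters using a known map scale. The function names, the spoken phrasing, and the `meters_per_pixel` parameter are hypothetical, and the center-to-center distance is a straight-line measure, not a walking distance along corridors.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in map pixels


def size_m(box: Box, meters_per_pixel: float) -> Tuple[float, float]:
    """Width and height of an axis-aligned area, in meters."""
    x0, y0, x1, y1 = box
    return (x1 - x0) * meters_per_pixel, (y1 - y0) * meters_per_pixel


def center_distance_m(a: Box, b: Box, meters_per_pixel: float) -> float:
    """Straight-line distance between the centers of two areas, in meters."""
    ax, ay = (a[0] + a[2]) / 2, (a[1] + a[3]) / 2
    bx, by = (b[0] + b[2]) / 2, (b[1] + b[3]) / 2
    return math.hypot(bx - ax, by - ay) * meters_per_pixel


def describe(name_a: str, a: Box, name_b: str, b: Box,
             meters_per_pixel: float) -> str:
    """Hypothetical spoken summary of one area's size and its distance to another."""
    w, h = size_m(a, meters_per_pixel)
    d = center_distance_m(a, b, meters_per_pixel)
    return f"{name_a}, about {w:.0f} by {h:.0f} meters, {d:.0f} meters from {name_b}"
```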

Depiction of ImageAssist within a navigation context. A map-oriented version of ImageAssist can allow BLV users to better survey and explore floor plans and maps, such as this one of Penn Station in New York City.

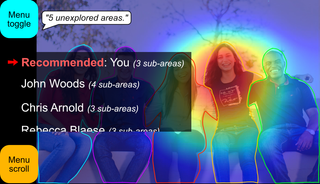

A final context is social media. Tools like ImageAssist can allow BLV users to explore and learn about images they come across on social media even more closely, especially photos that may have personal value. Indeed, some participants recounted times when they appreciated being able to deeply explore images with existing tools such as Microsoft's Seeing AI:

There was an image — I was with my family. We went to New York, and we took a picture of the Brooklyn Bridge together. And then I explored the image [using Seeing AI] and I found out there were buildings behind us — and the sky, clouds, and things like that. So I really liked having that extra info.

Depiction of ImageAssist within a social media context. Using ImageAssist, BLV users can more deeply explore images that may have personal value to them, such as this group photo.